General and Experimental Psychology

Research group

Perception and Action

Graduate school

NeuroAct

Emmy-Noether-Group

Sensory-Motor Decision-Making

Currently at the University of California, Berkeley.

Education

Research Interests

Refereed journal papers

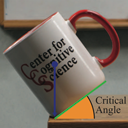

Cholewiak, S. A., Fleming, R. W., & Singh, M. (2015). Perception of physical stability and center of mass of 3-D objects. Journal of Vision, 15(2), 1-11. doi: 10.1167/15.2.13

Abstract: Humans can judge from vision alone whether an object is physically stable or not. Such judgments allow observers to predict the physical behavior of objects, and hence to guide their motor actions. We investigated the visual estimation of physical stability of 3-D objects (shown in stereoscopically viewed rendered scenes) and how it relates to visual estimates of their center of mass (COM). In Experiment 1, observers viewed an object near the edge of a table and adjusted its tilt to the perceived critical angle, i.e., the tilt angle at which the object was seen as equally likely to fall or return to its upright stable position. In Experiment 2, observers visually localized the COM of the same set of objects. In both experiments, observers' settings were compared to physical predictions based on the objects' geometry. In both tasks, deviations from physical predictions were, on average, relatively small. More detailed analyses of individual observers' settings in the two tasks, however, revealed mutual inconsistencies between observers' critical-angle and COM settings. The results suggest that observers did not use their COM estimates in a physically correct manner when making visual judgments of physical stability.
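The physical prediction for the critical angle follows from the COM position: an object pivoting on its base rim balances when its COM sits directly above the rim. The sketch below computes this for an upright, solid, uniform-density right circular frustum, using the standard centroid formula for a frustum. The uniform density, the rigid pivot on the bottom rim, and the function names are assumptions for illustration; it is not the procedure used to generate the papers' predictions.

```python
import math


def frustum_com_height(bottom_radius: float, top_radius: float, height: float) -> float:
    """Height of the centre of mass above the base of a solid, uniform-density
    right circular frustum (a cylinder if the radii are equal, a cone if top_radius == 0)."""
    R, r, h = bottom_radius, top_radius, height
    return h * (R**2 + 2 * R * r + 3 * r**2) / (4 * (R**2 + R * r + r**2))


def critical_angle_deg(bottom_radius: float, top_radius: float, height: float) -> float:
    """Tilt from upright (degrees) at which the centre of mass sits directly above
    the bottom rim, i.e. the balance point when the object pivots on that rim."""
    com_z = frustum_com_height(bottom_radius, top_radius, height)
    # Upright, the COM is bottom_radius away horizontally from the rim and com_z
    # above it, so the object balances after tilting by atan(bottom_radius / com_z).
    return math.degrees(math.atan2(bottom_radius, com_z))


# A cylinder whose height is twice its radius has its COM at height r: 45 degrees.
print(round(critical_angle_deg(1.0, 1.0, 2.0), 6))  # 45.0
# Tapering the top (same base, same height) lowers the COM and raises the angle.
print(critical_angle_deg(1.0, 0.5, 2.0) > critical_angle_deg(1.0, 1.0, 2.0))  # True
```

Under these assumptions, any observer COM setting can be converted into the critical angle it implies, which is one way to check the two kinds of settings against each other.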

Denisova, K., Kibbe, M. M., Cholewiak, S. A., & Kim, S.-H. (2014). Intra- and intermanual curvature aftereffect can be obtained via tool-touch. IEEE Transactions on Haptics. doi: 10.1109/TOH.2013.63

Abstract: We examined the perception of virtual curved surfaces explored with a tool. We found a reliable curvature aftereffect, suggesting neural representation of the curvature in the absence of direct touch. Intermanual transfer of the aftereffect suggests that this representation is somewhat independent of the hand used to explore the surface.

Pantelis, P. C., Baker, C. L., Cholewiak, S. A., Sanik, K., Weinstein, A., Wu, C.-C., Tenenbaum, J. B., & Feldman, J. (2014). Inferring the intentional states of autonomous virtual agents. Cognition, 130, 360-379. doi: 10.1016/j.cognition.2013.11.011

Abstract: Inferring the mental states of other agents, including their goals and intentions, is a central problem in cognition. A critical aspect of this problem is that one cannot observe mental states directly, but must infer them from observable actions. To study the computational mechanisms underlying this inference, we created a two-dimensional virtual environment populated by autonomous agents with independent cognitive architectures. These agents navigate the environment, collecting "food" and interacting with one another. The agents' behavior is modulated by a small number of distinct goal states: attacking, exploring, fleeing, and gathering food. We studied subjects' ability to detect and classify the agents' continually changing goal states on the basis of their motions and interactions. Although the programmed ground truth goal state is not directly observable, subjects' responses showed both high validity (correlation with this ground truth) and high reliability (correlation with one another). We present a Bayesian model of the inference of goal states, and find that it accounts for subjects' responses better than alternative models. Although the model is fit to the actual programmed states of the agents, and not to subjects' responses, its output actually conforms better to subjects' responses than to the ground truth goal state of the agents.
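Inferring a hidden, changing goal from a stream of observed motions can be illustrated with a generic hidden-Markov forward filter. This is a swapped-in textbook technique, not the Bayesian model from the paper: only the four goal labels come from the abstract, while the "sticky" switching model and the cue likelihoods are hypothetical values chosen for illustration.

```python
from typing import Dict, List

# Goal labels taken from the abstract; every number below is hypothetical.
GOALS = ["attacking", "exploring", "fleeing", "gathering"]

Belief = Dict[str, float]


def sticky_transition(stay: float = 0.9) -> Dict[str, Dict[str, float]]:
    """Hypothetical goal-switching model: keep the current goal with probability
    `stay`, otherwise switch uniformly to one of the other goals."""
    switch = (1.0 - stay) / (len(GOALS) - 1)
    return {prev: {nxt: stay if nxt == prev else switch for nxt in GOALS} for prev in GOALS}


def forward_filter(prior: Belief, transition: Dict[str, Dict[str, float]],
                   likelihoods: List[Belief]) -> List[Belief]:
    """HMM forward recursion: returns P(goal_t | cues_1..t) for every time step."""
    belief = dict(prior)
    history: List[Belief] = []
    for lik in likelihoods:
        # Predict: push the previous belief through the goal-switching model.
        predicted = {g: sum(belief[p] * transition[p][g] for p in GOALS) for g in GOALS}
        # Update: weight each goal by how well it explains the current motion cue.
        unnormalized = {g: predicted[g] * lik[g] for g in GOALS}
        total = sum(unnormalized.values())
        belief = {g: v / total for g, v in unnormalized.items()}
        history.append(belief)
    return history


uniform = {g: 1.0 / len(GOALS) for g in GOALS}
# Hypothetical cue likelihoods, e.g. for fast motion away from another agent.
cue = {"attacking": 0.1, "exploring": 0.2, "fleeing": 0.6, "gathering": 0.1}
beliefs = forward_filter(uniform, sticky_transition(), [cue] * 3)
print(max(beliefs[-1], key=beliefs[-1].get))  # fleeing
```

The sticky transition matrix encodes the idea that goals persist for a while, so repeated consistent cues sharpen the posterior rather than each observation being judged in isolation.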

Cholewiak, S. A., Fleming, R. W., & Singh, M. (2013). Visual perception of the physical stability of asymmetric three-dimensional objects. Journal of Vision, 13(4), 1–13. doi: 10.1167/13.4.12

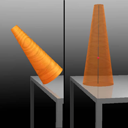

Abstract: Visual estimation of object stability is an ecologically important judgment that allows observers to predict the physical behavior of objects. A natural method that has been used in previous work to measure perceived object stability is the estimation of perceived "critical angle", the angle at which an object appears equally likely to fall over versus return to its upright stable position. For an asymmetric object, however, the critical angle is not a single value, but varies with the direction in which the object is tilted. The current study addressed two questions: (a) Can observers reliably track the change in critical angle as a function of tilt direction? (b) How do they visually estimate the overall stability of an object, given the different critical angles in various directions? To address these questions, we employed two experimental tasks using simple asymmetric 3D objects (skewed conical frustums): settings of critical angle in different directions relative to the intrinsic skew of the 3D object (Experiment 1), and stability matching across 3D objects with different shapes (Experiments 2 and 3). Our results showed that (a) observers can perceptually track the varying critical angle in different directions quite well; and (b) their estimates of overall object stability are strongly biased toward the minimum critical angle (i.e., the critical angle in the least stable direction). Moreover, the fact that observers can reliably match perceived object stability across 3D objects with different shapes suggests that perceived stability is likely to be represented along a single dimension.
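The direction dependence of the critical angle can be made concrete with a simplified geometry: a circular base of radius R and a centre of mass at height z, shifted horizontally by an offset toward the direction of skew. Tipping toward direction φ pivots about the rim tangent in that direction, giving θ(φ) = atan((R − offset·cos φ) / z). The sketch also converts a critical angle into the aspect ratio of a uniform cylinder with the same critical angle, mirroring the cylinder-matching task described in the 2011 abstract further down this page. The COM values are hypothetical, the object is assumed uniform and upright-stable (offset < R), and the code does not model observers' settings.

```python
import math


def critical_angle_deg(base_radius: float, com_height: float, com_offset: float,
                       direction_deg: float) -> float:
    """Critical angle (degrees) for tipping toward direction_deg, for an object with a
    circular base centred at the origin and its centre of mass displaced horizontally
    by com_offset toward direction 0 (the direction of skew)."""
    phi = math.radians(direction_deg)
    # Horizontal distance from the COM's ground projection to the pivot line
    # (the tangent to the base rim in the tipping direction).
    lever = base_radius - com_offset * math.cos(phi)
    return math.degrees(math.atan2(lever, com_height))


def min_and_mean_critical_angle(base_radius, com_height, com_offset, n_directions=360):
    """Minimum and mean critical angle over evenly spaced tipping directions."""
    angles = [critical_angle_deg(base_radius, com_height, com_offset, 360.0 * k / n_directions)
              for k in range(n_directions)]
    return min(angles), sum(angles) / len(angles)


def matching_cylinder_aspect(critical_deg: float) -> float:
    """Height-to-diameter ratio of a uniform upright cylinder with the given critical
    angle: tan(angle) = radius / (height / 2) = diameter / height."""
    return 1.0 / math.tan(math.radians(critical_deg))


# Hypothetical object: base radius 1, COM at height 1, shifted 0.5 toward the skew.
lowest, average = min_and_mean_critical_angle(1.0, 1.0, 0.5)
print(round(lowest, 2), round(average, 2))
print(round(matching_cylinder_aspect(lowest), 3))  # 2.0
```

For a skewed object the minimum and mean critical angles diverge, so a cylinder matched to the minimum is taller and thinner than one matched to the mean; this is the contrast the stability-matching experiments exploit.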

Kocsis, M. B., Cholewiak, S. A., Traylor, R. M., Adelstein, B. D., Hirleman, E. D., & Tan, H. Z. (2013). Discrimination of real and virtual surfaces with sinusoidal and triangular gratings using the fingertip and stylus. IEEE Transactions on Haptics, 6(2), 181–192. doi: 10.1109/TOH.2012.31

Abstract: Two-interval two-alternative forced-choice discrimination experiments were conducted separately for sinusoidal and triangular textured surface gratings from which amplitude (i.e., height) discrimination thresholds were estimated. Participants (group sizes: n = 4 to 7) explored one of these texture types either by fingertip on real gratings (Finger real), by stylus on real gratings (Stylus real), or by stylus on virtual gratings (Stylus virtual). The real gratings were fabricated from stainless steel by an electrical discharge machining process while the virtual gratings were rendered via a programmable force-feedback device. All gratings had a 2.5-mm spatial period. On each trial, participants compared test gratings with 55, 60, 65, or 70 μm amplitudes against a 50-μm reference. The results indicate that discrimination thresholds did not differ significantly between sinusoidal and triangular gratings. With sinusoidal and triangular data combined, the average (mean ± standard error) for the Stylus-real threshold (2.5 ± 0.2 μm) was significantly smaller (p < 0.01) than that for the Stylus-virtual condition (4.9 ± 0.2 μm). Differences between the Finger-real threshold (3.8 ± 0.2 μm) and those from the other two conditions were not statistically significant. Further studies are needed to better understand the differences in perceptual cues resulting from interactions with real and virtual gratings.
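For reference, the harmonic content of sinusoidal, triangular, and square-wave grating profiles can be compared with a single-bin discrete Fourier transform. This is the frequency-domain view taken in the 2010 "A frequency-domain analysis of haptic gratings" entry listed below, where a square wave's fundamental (4A/π) and third harmonic (4A/3π) are analyzed separately. The idealized profiles, the use of peak amplitude A, and the 1024-sample resolution are assumptions; this does not reproduce either paper's analysis.

```python
import cmath
import math
from typing import List


def grating_profile(shape: str, amplitude: float, n: int = 1024) -> List[float]:
    """One spatial period of an idealized grating height profile, sampled n times."""
    samples = []
    for i in range(n):
        phase = i / n  # fraction of one spatial period
        if shape == "sine":
            samples.append(amplitude * math.sin(2 * math.pi * phase))
        elif shape == "square":
            samples.append(amplitude if phase < 0.5 else -amplitude)
        elif shape == "triangle":
            # Rises linearly from -A to +A over half a period, then falls back.
            samples.append(amplitude * (4 * phase - 1 if phase < 0.5 else 3 - 4 * phase))
        else:
            raise ValueError(f"unknown shape: {shape}")
    return samples


def harmonic_amplitude(samples: List[float], k: int) -> float:
    """Amplitude of the k-th harmonic of one sampled period (single DFT bin)."""
    n = len(samples)
    coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
    return 2 * abs(coeff) / n


def spatial_frequency(period_mm: float) -> float:
    """Spatial frequency in cycles/mm for a grating with the given period in mm."""
    return 1.0 / period_mm


for shape in ("sine", "triangle", "square"):
    profile = grating_profile(shape, 1.0)
    print(shape, [round(harmonic_amplitude(profile, k), 3) for k in (1, 2, 3)])

print(spatial_frequency(2.5))  # 0.4
```

A triangular profile's odd harmonics fall off as 1/k² (third harmonic about 1/9 of the fundamental), whereas a square wave's fall off only as 1/k (third harmonic 1/3 of the fundamental).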

Cholewiak, S. A., Kim, K., Tan, H. Z., & Adelstein, B. D. (2010). A frequency-domain analysis of haptic gratings. IEEE Transactions on Haptics, 3(1), 3–14. doi: 10.1109/TOH.2009.36

Abstract: The detectability and discriminability of virtual haptic gratings were analyzed in the frequency domain. Detection (Exp. 1) and discrimination (Exp. 2) thresholds for virtual haptic gratings were estimated using a force-feedback device that simulated sinusoidal and square-wave gratings with spatial periods from 0.2 to 38.4 mm. The detection threshold results indicated that for spatial periods up to 6.4 mm (i.e., spatial frequencies >0.156 cycle/mm), the detectability of square-wave gratings could be predicted quantitatively from the detection thresholds of their corresponding fundamental components. The discrimination experiment confirmed that at higher spatial frequencies, the square-wave gratings were initially indistinguishable from the corresponding fundamental components until the third harmonics were detectable. At lower spatial frequencies, the third harmonic components of square-wave gratings had lower detection thresholds than the corresponding fundamental components. Therefore, the square-wave gratings were detectable as soon as the third harmonic components were detectable. Results from a third experiment where gratings consisting of two superimposed sinusoidal components were compared (Exp. 3) showed that people were insensitive to the relative phase between the two components. Our results have important implications for engineering applications, where complex haptic signals are transmitted at high update rates over networks with limited bandwidths.

Refereed conference papers

Pantelis, P., Cholewiak, S. A., Ringstad, P., Sanik, K., Weinstein, A., Wu, C.-C., & Feldman, J. (2011, July). Perception of intentions and mental states in autonomous virtual agents. In Cognitive Science Society 33rd Annual Meeting. Boston, MA.

Abstract: Comprehension of goal-directed, intentional motion is an important but understudied visual function. To study it, we created a two-dimensional virtual environment populated by independently-programmed autonomous virtual agents, which navigate the environment, collecting food and competing with one another. Their behavior is modulated by a small number of distinct "mental states": exploring, gathering food, attacking, and fleeing. In two experiments, we studied subjects' ability to detect and classify the agents’ continually changing mental states on the basis of their motions and interactions. Our analyses compared subjects’ classifications to the ground truth state occupied by the observed agent’s autonomous program. Although the true mental state is inherently hidden and must be inferred, subjects showed both high validity (correlation with ground truth) and high reliability (correlation with one another). The data provide intriguing evidence about the factors that influence estimates of mental state, a key step towards a true "psychophysics of intention."

Cholewiak, S. A., Tan, H. Z., & Ebert, D. S. (2008). Haptic identification of stiffness and force magnitude. In Proceedings of the 2008 Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems (pp. 87–91). Washington, DC, USA: IEEE Computer Society. doi: 10.1109/HAPTICS.2008.4479918

Abstract: As haptics becomes an integral component of scientific data visualization systems, there is a growing need to study "haptic glyphs" (building blocks for displaying information through the sense of touch) and quantify their information transmission capability. The present study investigated the channel capacity for transmitting information through stiffness or force magnitude. Specifically, we measured the number of stiffness or force-magnitude levels that can be reliably identified in an absolute identification paradigm. The range of stiffness and force magnitude used in the present study, 0.2–3.0 N/mm and 0.1–5.0 N, respectively, was typical of the parameter values encountered in most virtual reality or data visualization applications. Ten individuals participated in a stiffness identification experiment, each completing 250 trials. Subsequently, four of these individuals and six additional participants completed 250 trials in a force-magnitude identification experiment. A custom-designed three-degree-of-freedom force-feedback device, the ministick, was used for stimulus delivery. The results showed an average information transfer of 1.46 bits for stiffness identification, or equivalently, 2.8 correctly identifiable stiffness levels. The average information transfer for force magnitude was 1.54 bits, or equivalently, 2.9 correctly identifiable force magnitudes. Therefore, on average, the participants could only reliably identify 2–3 stiffness levels in the range of 0.2–3.0 N/mm, and 2–3 force-magnitude levels in the range of 0.1–5.0 N. Individual performance varied from 1 to 4 correctly identifiable stiffness levels and 2 to 4 correctly identifiable force-magnitude levels. Our results are consistent with reported information transfers for haptic stimuli. Based on the present study, it is recommended that 2 stiffness or force-magnitude levels (i.e., high and low) be used with haptic glyphs in a data visualization system, with an additional third level (medium) for more experienced users.
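The conversion from bits to "correctly identifiable levels" is 2 raised to the information transfer, so 1.46 bits corresponds to about 2.8 levels and 1.54 bits to about 2.9. The sketch below shows that conversion together with the standard maximum-likelihood estimate of information transfer from a stimulus-by-response confusion matrix. Which estimator (and any small-sample bias correction) the paper used is not stated here, so treat the estimator as an assumption. The two example matrices are idealized sanity checks, not experimental data.

```python
import math
from typing import List


def information_transfer(confusion: List[List[int]]) -> float:
    """Maximum-likelihood estimate of transmitted information (bits) from a
    stimulus-by-response count matrix (rows: presented level, columns: response)."""
    total = sum(sum(row) for row in confusion)
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    bits = 0.0
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            if count:  # empty cells contribute nothing (0 * log 0 := 0)
                bits += (count / total) * math.log2(count * total / (row_totals[i] * col_totals[j]))
    return bits


def identifiable_levels(bits: float) -> float:
    """Number of perfectly identifiable categories equivalent to `bits`: 2 ** bits."""
    return 2.0 ** bits


# Reported averages from the abstract, converted to levels.
print(round(identifiable_levels(1.46), 1))  # 2.8
print(round(identifiable_levels(1.54), 1))  # 2.9

# Sanity checks on idealized (not experimental) matrices.
perfect = [[10 if i == j else 0 for j in range(4)] for i in range(4)]
guessing = [[5] * 4 for _ in range(4)]
print(information_transfer(perfect), information_transfer(guessing))  # 2.0 0.0
```

The plain maximum-likelihood estimate tends to overstate information transfer when the number of trials per cell is small, which is one reason identification studies collect many trials per participant.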

Cholewiak, S. A., & Tan, H. Z. (2007). Frequency analysis of the detectability of virtual haptic gratings. In Proceedings of the Second Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems (pp. 27–32). Washington, DC, USA: IEEE Computer Society. doi: 10.1109/WHC.2007.57

Refereed other

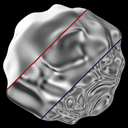

Cholewiak, S. A. & Küçükoğlu, G. (2015, May). Reflections of the environment distort perceived 3D shape. Vision Sciences Society (VSS) Annual Meeting 2015 Demo Night.

Abstract: We will showcase how a specular object’s image is dependent upon the way the reflected environment interacts with the object’s geometry and how its perceived shape depends upon motion and the frequency content of the environment. Demos include perceived non-rigid deformation of shape and changes in material percept.

Cholewiak, S. A. & Küçükoğlu, G. (2014, May). What happens to a shiny 3D object in a rotating environment? Vision Sciences Society (VSS) Annual Meeting 2014 Demo Night.

Abstract: A mirrored object reflects a distorted world. The distortions depend on the object's surface and act as points of correspondence when it moves. We demonstrate how the perceived speed of a rotating mirrored object is affected by rotation of the environment and present an interesting case of perceived non-rigid deformation.

Cholewiak, S. A. (2012). The tipping point: Visual estimation of the physical stability of three-dimensional objects (Unpublished doctoral dissertation). Rutgers University, New Brunswick, NJ, USA.

Abstract: Vision research generally focuses on the currently visible surface properties of objects, such as color, texture, luminance, orientation, and shape. In addition, however, observers can also visually predict the physical behavior of objects, which often requires inferring the action of hidden forces, such as gravity and support relations. One of the main conclusions from the naive physics literature is that people often have inaccurate physical intuitions; however, more recent research has shown that with dynamic simulated displays, observers can correctly infer physical forces (e.g., timing hand movements to catch a falling ball correctly takes into account Newton’s laws of motion). One ecologically important judgment about physical objects is whether they are physically stable or not. This research project examines how people perceive physical stability and addresses (1) How do visual estimates of stability compare to physical predictions? Can observers track the influence of specific shape manipulations on object stability? (2) Can observers match stability across objects with different shapes? How is the overall stability of an object estimated? (3) Are visual estimates of object stability subject to adaptation effects? Is stability a perceptual variable? The experimental findings indicate that: (1) Observers are able to judge the stability of objects quite well and are close to the physical predictions on average. They can track how changing a shape will affect the physical stability; however, the perceptual influence is slightly smaller than physically predicted. (2) Observers can match the stabilities of objects with different three-dimensional shapes (suggesting that object stability is a unitary dimension), and their judgments of overall stability are strongly biased towards the minimum critical angle. (3) The majority of observers exhibited a stability adaptation aftereffect, providing evidence in support of the claim that stability may be a perceptual variable.

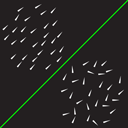

Cholewiak, S. A. (2010). Perceptual estimation of variance in orientation and its dependence on sample size (Unpublished master's thesis). Rutgers University, New Brunswick, NJ, USA.

Abstract: Recent research has shown that participants are very good at perceptually estimating summary statistics of sets of similar objects (e.g., Ariely, 2001; Chong & Treisman, 2003; 2005). While the research has focused on first-order statistics (e.g., the mean size of a set of discs), it is unlikely that a mental representation of the world includes only a list of mean estimates (or expected values) of various attributes. Therefore, a comprehensive theory of perceptual summary statistics would be incomplete without an investigation of the representation of second-order statistics (i.e., variance). Two experiments were conducted to test participants' ability to discriminate samples that differed in orientation variability. Discrimination thresholds and points of subjective equality for displays of oriented triangles were measured in Experiment 1. The results indicated that participants could discriminate variance without bias and that participant sensitivity (measured via relative thresholds, i.e., Weber fractions) was dependent upon sample size but not baseline variance. Experiment 2 investigated whether participants used a simpler second-order statistic, namely, sample range, to discriminate dispersion in orientation. The results of Experiment 2 showed that variance was a much better predictor of performance than sample range. Taken together, the experiments suggest that variance information is part of the visual system's representation of scene variables. However, unlike the estimation of first-order statistics, the estimation of variance depends crucially on sample size.
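One textbook way to see why a Weber fraction for variance could depend on sample size but not on baseline variance: for n independent normal samples, Var(s²) = 2σ⁴/(n − 1), so the sampling noise in the variance, relative to the variance itself, is √(2/(n − 1)) whatever σ is. An ideal observer limited only by this sampling noise would therefore show a relative threshold that shrinks with n and is flat across σ. This ideal-observer reasoning, the normal distribution, and treating orientations as linear (reasonable only for small spreads) are assumptions; the sketch is not the thesis's analysis.

```python
import math
import random
import statistics


def relative_sd_of_variance(n: int) -> float:
    """Theoretical SD of the sample variance, as a fraction of the true variance,
    for n independent normal samples: sqrt(2 / (n - 1)), whatever sigma is."""
    return math.sqrt(2.0 / (n - 1))


def sample_variance(xs):
    """Unbiased sample variance (n - 1 denominator)."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)


def simulated_relative_sd(n: int, sigma: float, trials: int = 5000, seed: int = 1) -> float:
    """Monte Carlo check: draw `trials` displays of n orientations (degrees) with
    spread sigma and measure how much the sample variance fluctuates."""
    rng = random.Random(seed)
    estimates = [sample_variance([rng.gauss(0.0, sigma) for _ in range(n)])
                 for _ in range(trials)]
    return statistics.stdev(estimates) / sigma ** 2


for n in (4, 16):
    for sigma in (5.0, 20.0):
        print(n, sigma, round(relative_sd_of_variance(n), 3),
              round(simulated_relative_sd(n, sigma), 3))
```

Quadrupling σ leaves the relative fluctuation unchanged, while going from 4 to 16 elements roughly halves it.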

Cholewiak, R. W., & Cholewiak, S. A. (2010). Cutaneous perception. In E. B. Goldstein (Ed.), Encyclopedia of Perception (Vol. 1, pp. 343–348). SAGE.

Cholewiak, R. W., & Cholewiak, S. A. (2010). Pain: Physiological mechanisms. In E. B. Goldstein (Ed.), Encyclopedia of Perception (Vol. 1, pp. 722–726). SAGE.

Refereed conference talks

Cholewiak, S. A., Vergne, R., Kunsberg, B., Zucker, S. W., & Fleming, R. W. (2015, May). Appearance controls interpretation of orientation flows for 3D shape estimation. Computational and Mathematical Models in Vision (MODVIS) Annual Meeting 2015.

Abstract: The visual system can infer 3D shape from orientation flows arising from both texture and shading patterns. However, these two types of flows provide fundamentally different information about surface structure. Texture flows, when derived from distinct elements, mainly signal first-order features (surface slant), whereas shading flow orientations primarily relate to second-order surface properties (the change in surface slant). Because the source of an image's structure is inherently ambiguous, it is crucial for the brain to identify whether flow patterns originate from texture or shading to correctly infer shape from a 2D image. One possible approach would be to use 'surface appearance' (e.g. smooth gradients vs. fine-scale texture) to distinguish texture from shading. However, the structure of the flow fields themselves may indicate whether a given flow is more likely due to first- or second-order shape information. We test these two possibilities in this set of experiments, looking at speeded and free responses.

Cholewiak, S. A., Kunsberg, B., Zucker, S., & Fleming, R. W. (2014, May). Predicting 3D shape perception from shading and texture flows. Journal of Vision, 14(10), 1113. doi: 10.1167/14.10.1113

Abstract: Perceiving 3D shape involves processing and combining different cues, including texture, shading, and specular reflections. We have previously shown that orientation flows produced by the various cues provide fundamentally different information about shape, leading to complementary strengths and weaknesses (see Cholewiak & Fleming, VSS 2013). An important consequence of this is that a given shape may appear different, depending on whether it is shaded or textured, because the different cues reveal different shape features. Here we sought to predict specific regions of interest (ROIs) within shapes where the different cues lead to better or worse shape perception. Since the predictions were derived from the orientation flows, our analysis provides a key test of how and when the visual system uses orientation flows to estimate shape. We used a gauge figure experiment to evaluate shape perception. Cues included Lambertian shading, isotropic 3D texture, both shading and texture, and pseudo-shaded depth maps. Participant performance was compared to a number of image and scene-based perceptual performance predictors. Shape from texture ROI models included theories incorporating the surface's slant and tilt, second-order partial derivatives (i.e., change in tilt direction), and tangential and normal curvatures of isotropic texture orientation. Shape from shading ROI models included image based metrics (e.g., brightness gradient change), anisotropy of the second fundamental form, and surface derivatives. The results confirm that individually texture and shading are not diagnostic of object shape for all locations, but local performance correlates well with ROIs predicted by first and second-order properties of shape. The perceptual ROIs for texture and shading were well predicted via the mathematical models. In regions that were ROI for both cues, shading and texture performed complementary functions, suggesting that a common front-end based on orientation flows can predict both strengths and weaknesses of different cues at a local scale.
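In a gauge figure task, each setting amounts to a local surface normal, which is commonly described by its slant (angle away from the line of sight) and tilt (direction of that slant in the image plane), the same quantities named in the texture ROI models above. The sketch converts between slant/tilt and a unit normal and scores a setting by the angle between the set and true normals. The viewer-centred axis convention, the choice of angular error as the performance measure, and the example values are assumptions; the papers' own error metrics may differ.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normal_from_slant_tilt(slant_deg: float, tilt_deg: float) -> Vec3:
    """Unit surface normal in viewer-centred coordinates (z toward the viewer):
    slant is the angle between normal and line of sight, tilt its image-plane direction."""
    s, t = math.radians(slant_deg), math.radians(tilt_deg)
    return (math.sin(s) * math.cos(t), math.sin(s) * math.sin(t), math.cos(s))


def slant_tilt_from_normal(normal: Vec3) -> Tuple[float, float]:
    """Inverse of normal_from_slant_tilt; tilt is undefined (reported as 0) at zero slant."""
    x, y, z = normal
    length = math.sqrt(x * x + y * y + z * z)
    slant = math.degrees(math.acos(max(-1.0, min(1.0, z / length))))
    tilt = math.degrees(math.atan2(y, x)) % 360.0
    return slant, tilt


def angular_error_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two normals, e.g. a gauge-figure setting and the true normal."""
    dot = sum(p * q for p, q in zip(a, b))
    norms = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))


setting = normal_from_slant_tilt(30.0, 0.0)   # hypothetical observer setting
truth = normal_from_slant_tilt(30.0, 180.0)   # hypothetical ground-truth normal
print(round(angular_error_deg(setting, truth), 6))  # 60.0
```

Separating slant and tilt errors, rather than only the combined angle, is what allows first-order (slant) and direction-of-change (tilt) information to be evaluated separately for each cue.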

Fleming, R. W. & Cholewiak, S. A. (2014, May). The dark secrets of dirty concavities. Journal of Vision, 14(10), 1317. doi: 10.1167/14.10.1317

Abstract: Cracks, crevices and other surface concavities are typically dark places where both dirt and shadows tend to get trapped. By contrast, convex features are exposed to light and often get buffed a lighter or more glossy shade through contact with other surfaces. This means that in many cases, for complex surface geometries, shading and pigmentation are spatially correlated with one another, with dark concavities that are dimly illuminated and lighter convexities, which are more brightly shaded. How does the visual system distinguish between pigmentation and shadows when the two are spatially correlated? We performed a statistical analysis of complex rough surfaces under illumination conditions that varied parametrically from highly directional to highly diffuse in order to characterise the relationships between shading, illumination and shape. Whereas classical shape from shading analyses relate image intensities to surface orientations and depths, here, we find that intensity information also carries important additional cues to surface curvature. By shifting the phase of dark portions of the image relative to the surface geometry, we show that the visual system uses these relationships between curvatures and intensities to distinguish between shadows and pigmentation. Interestingly, we also find that the visual system is remarkably good at separating pigmentation and shadows even when they are highly correlated with one another, as long as the illumination conditions provide subtle local image orientation cues to distinguish the two. Together, these findings provide key novel constraints on computational models of human shape from shading and lightness perception.

Cholewiak, S. A., Fleming, R. W., & Singh, M. (2014, May). Visually judged physical stability and center of mass of 3D objects. Computational and Mathematical Models in Vision (MODVIS) Annual Meeting 2014.

Abstract: Humans can judge from vision alone whether an object is physically stable or is likely to fall. This ability allows us to predict the physical behavior of objects, and hence to guide motor actions. We examined observers’ ability to visually estimate the physical stability of 3D objects (conical frustums of varying shapes and sizes), and how these relate to their estimates of center of mass (COM). In two experiments, observers stereoscopically viewed a rendered scene containing a table and a 3D object placed near the table’s edge. In the first experiment, they adjusted the tilt of the object to set its critical angle, the tilt at which the object is equally likely to fall off vs. return to its upright stable position. In the second experiment, observers localized the objects’ COM. In both experiments, observers’ performance was compared to physical predictions based on the objects’ geometry. Although deviations from physical predictions in both tasks were relatively small, more detailed analyses of individual performance in the two tasks revealed mutual inconsistencies between the critical angle and COM settings. These suggest that observers may not be using their COM estimates in the physically correct manner to make judgments of physical stability.

Cholewiak, S. A., Kunsberg, B., Zucker, S., & Fleming, R. W. (2014, March). Perceptual regions of interest for 3D shape derived from shading and texture flows. Tagung experimentell arbeitender Psychologen (TeaP, Conference of Experimental Psychologists) Annual Meeting 2014.

Abstract: Perceiving 3D shape from shading and texture requires combining different, but complementary, information about shape features extracted from 2D images. Here, we sought to predict specific regions of interest (ROIs) within images, derived from orientation flows, where each cue leads to locally better or worse shape perception. This analysis assesses whether the visual system uses orientation flows to estimate shape. A gauge figure experiment was used to evaluate shape perception for 3D objects with Lambertian shading, isotropic texture, both shading and texture, and pseudo-shaded depth maps. Participant performance was compared to image and scene-based perceptual predictors. Shape from texture ROI models incorporated surface slant and tilt, second order partial derivatives, and tangential and normal curvatures of texture orientation. Shape from shading ROI models included image based metrics, anisotropy of the second fundamental form, and surface derivatives. Results confirmed that, individually, texture and shading are not diagnostic of object shape for all locations, but local performance correlates well with ROIs predicted by first and second order shape properties. In regions that were ROI for both cues, shading and texture performed complementary functions, suggesting a common front-end based on orientation flows locally predicts both strengths and weaknesses of cues.

Fleming, R. W. & Cholewiak, S. A. (2014, March). Visually disentangling shading and surface pigmentation when the two are correlated. Tagung experimentell arbeitender Psychologen (TeaP, Conference of Experimental Psychologists) Annual Meeting 2014.

Abstract: Many surfaces, such as weathered rocks or tree-bark, have complex 3D relief, featuring cracks, bumps and ridges. It is not uncommon for dirt to accumulate in the concavities, which is also where shadows are most likely to occur. By contrast, convex features are exposed to light and often get buffed a lighter shade. Thus, shading and pigmentation are often spatially correlated with one another, making it computationally difficult to distinguish them. How does the visual system separate shading from pigmentation, when they are correlated? We performed a statistical analysis of complex rough surfaces under directional and diffuse illumination to characterise the relationships between shading, illumination and shape. We find that image intensities carry important information about surface curvatures, and show that the visual system uses the relationships between curvatures and intensities to distinguish between shadows and pigmentation. We also find that subjects are remarkably good at separating shadows and pigmentation even when they are highly correlated. Together, these findings provide key novel constraints on computational models of human shape from shading and lightness perception.

Cholewiak, S. A., Singh, M., & Fleming, R. (2011). Perception of physical stability of asymmetrical three-dimensional objects. Journal of Vision, 11(11), 44. doi: 10.1167/11.11.44

Abstract: Visual estimation of object stability is an ecologically important judgment that allows observers to predict an object's physical behavior. One way to measure perceived stability is by estimating the ‘critical angle’ (relative to upright) at which an object is perceived to be equally likely to fall over versus return to its upright position. However, for asymmetrical objects, the critical angle varies with the direction in which the object is tilted. Here we ask: (1) Can observers reliably track the change in critical angle as a function of tilt direction? (2) How do observers visually estimate the overall stability of an object, given the different critical angles in various directions? Observers stereoscopically viewed a rendered scene containing a slanted conical frustum, with variable aspect ratio, sitting on a table. In Exp. 1, the object was placed near the edge of the table, and rotated through one of six angles relative to this edge. Observers adjusted its tilt angle (constrained to move directly toward the edge) in order to set the critical angle. We found that their settings tracked the variation in critical angle with tilt direction remarkably well. In Exp. 2, observers viewed on each trial one of the slanted frustums from Exp. 1, along with a cylindrical object of variable aspect ratio. They adjusted the aspect ratio of the cylinder in order to match the perceived stability of the slanted frustum. The results showed that on average observers' estimates of overall stability are well predicted by the minimum critical angle across all tilt directions, but not by the mean critical angle. Thus, observers appear to adopt the reasonable strategy of using the critical angle in the least stable direction to estimate the overall stability of an asymmetrical object.

Refereed conference posters

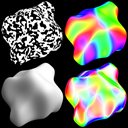

Cholewiak, S. A., Vergne, R., Kunsberg, B., Zucker, S. W., & Fleming, R. W. (2015, May). Distinguishing between texture and shading flows for 3D shape estimation. Vision Sciences Society (VSS) Annual Meeting 2015.

Abstract: The visual system can infer 3D shape from orientation flows arising from both texture and shading patterns. However, these two types of flows provide fundamentally different information about surface structure. Texture flows, when derived from distinct elements, mainly signal first-order features (surface slant), whereas shading flow orientations primarily relate to second-order surface properties. It is therefore crucial for the brain to identify whether flow patterns originate from shading or texture in order to infer shape correctly. One possible approach would be to use 'surface appearance' (e.g., smooth gradients vs. fine-scale texture) to distinguish texture from shading. However, the structure of the flow fields themselves may indicate whether a given flow is more likely due to first- or second-order shape information. Here we test these two possibilities. We generated irregular objects ('blobs') using sinusoidal perturbations of spheres. We then derived two new objects from each blob: one whose shading flow matched the original object's texture flow, and another whose texture flow matched the original's shading flow. Using high- and low-frequency environment maps to render the surfaces, we were able to manipulate surface appearance independently of the flow structure. This provided a critical test of how appearance interacts with orientation flow when estimating 3D shape, and revealed some striking illusions of shape. In an adjustment task, observers matched the perceived shape of a standard object to each comparison object by morphing between the generated shape surfaces. In a 2AFC task, observers were shown two manipulations for each blob and indicated which one matched a briefly flashed standard. Performance was compared with an orientation-flow-based model, which confirmed that observers' judgments agreed with texture and shading flow predictions. Both the structure of the flow and the overall appearance are important for shape perception, but appearance cues determine the inferred source of the observed flow field.

Mazzarella, J. E., Cholewiak, S. A., Phillips, F., & Fleming, R. W. (2014, May). Limits on the estimation of shape from specular surfaces. Journal of Vision, 14(10), 721. doi: 10.1167/14.10.721

Abstract: Humans are generally remarkably good at inferring 3D shape from distorted patterns of reflections on mirror-like objects (Fleming et al., 2004). However, there are conditions in which shape perception fails (complex planar reliefs under certain illuminations; Faisman and Langer, 2013). A good theory of shape perception should predict failures as well as successes, so here we sought to map out systematically the conditions under which subjects fail to estimate shape from specular reflections, and to understand why. To do this, we parametrically varied the spatial complexity (spatial frequency content) of both the 3D relief and the illumination, and measured under which conditions subjects could and could not infer shape. Specifically, we simulated surface reliefs with varying spatial frequency content and rendered them as perfect mirrors under spherical harmonic light probes with varying frequency content. Participants viewed the mirror-like surfaces and performed a depth-discrimination task. On each trial, the participants’ task was to indicate which of two locations on the surface (selected randomly from a range of relative depth differences on the object’s surface) was higher in depth. We mapped out performance as a function of both the relief and the lighting parameters. Results show that while participants were accurate within a given range for each manipulation, there also existed a range of spatial frequencies (namely, very high and very low frequencies) where participants could not estimate surface shape. Congruent with previous research, people were able to readily determine 3D shape using the information provided by specular reflections; however, performance was highly dependent on surface and environment complexity. Image analysis reveals the specific conditions that subjects rely on to perform the task, explaining the pattern of errors.

Mazzarella, J. E., Cholewiak, S. A., Phillips, F., & Fleming, R. W. (2014, April). Effects of varied spatial scale on perception of shape from shiny surfaces. Tagung experimentell arbeitender Psychologen (TeaP, Conference of Experimental Psychologists) Annual Meeting 2014.

Abstract: Humans are generally remarkably good at inferring 3D shape from distorted reflection patterns on mirror-like objects (Fleming et al., 2004). However, in certain conditions shape perception fails (complex planar reliefs under certain illuminations; Faisman and Langer, 2013). Here we systematically map out the conditions under which subjects fail to estimate shape from specular reflections. Specifically, we simulated surface reliefs with varying spatial frequency content and rendered them as perfect mirrors under spherical harmonic light probes with varying frequency content. Participants performed a depth-discrimination task in which they indicated which of two locations on the surface (selected randomly from a range of relative depth differences on the object’s surface) was higher in depth. Congruent with previous research, subjects were able to readily determine 3D shape using the information provided by specular reflections; however, performance was highly dependent on surface and environment complexity. Results show that while participants were accurate within a given range for each manipulation, there also existed a range of spatial frequencies (namely, very high and very low frequencies) where participants could not estimate surface shape. Image analysis reveals the specific conditions that subjects rely on to perform the task, explaining the pattern of errors.

Cholewiak, S. A., & Fleming, R. (2013). Towards a unified explanation of shape from shading and texture. Journal of Vision, 13(9), 258. doi: 10.1167/13.9.258

Abstract: The estimation of 3D shape from 2D images requires processing and combining many cues, including texture, shading, specular highlights and reflections. Previous research has shown that oriented filter responses ('orientation fields') may be used to perceptually reconstruct the surface structure of textured and shaded 3D objects. However, texture and shading provide fundamentally different information about 3D shape: texture provides information about surface orientations (which depend on the first derivative of surface depth), while shading provides information about surface curvatures (which depend on higher derivatives). In this project, we used geometric transformations that preserve the informativeness of one cue's orientation fields while disturbing the other cue’s orientation fields, to investigate whether oriented filter responses predict the observed strengths and weaknesses of texture and shading cues for 3D shape perception. In the first experiment, a 3D object was matched to two comparison objects, one with identical geometry and another with a subtly different pattern of surface curvatures. This transformation alters second derivatives of the surface while preserving first derivatives, so changes in the orientation fields predict higher detectability for shaded objects. This prediction was reflected in both participants' judgments and model performance. In the second experiment, observers matched the perceived shear of two objects. This transformation alters first derivatives but preserves second derivatives. Therefore, changes in the orientation fields predicted a stronger effect on perceived shape for textured objects, which was again reflected in participant and model performance. These results support a common front end, based on orientation fields, that accounts for the complementary strengths and weaknesses of texture and shading cues. Neither cue is fully diagnostic of object shape under all circumstances, and neither cue is 'better' than the other in all situations. Instead, the model provides a unified account of the conditions in which cues succeed and fail.

Cholewiak, S. A., Singh, M., & Fleming, R. (2012). Visual adaptation to physical stability of objects. Journal of Vision, 12(9), 304. doi: 10.1167/12.9.304

Abstract: Physical stability is an ecologically important judgment about objects that allows observers to predict object behavior and appropriately guide motor actions to interact with them. Moreover, it is an attribute that observers can estimate from vision alone, based on an object's shape. In previous work, we have used different tasks to investigate perceived object stability, including estimation of the critical angle of tilt and matching stability across objects with different shapes (VSS 2010, 2011). The ability to perform these tasks, however, does not necessarily indicate that object stability is a natural perceptual dimension. We asked whether it is possible to obtain adaptation aftereffects with perceived object stability. Does being exposed to a sequence of highly stable (bottom-heavy) objects make a test object appear less stable (and vice versa)? Our test objects were vertically elongated shapes with a flat base and a vertical axis of symmetry. The axis could be curved to different degrees while the base was kept horizontal. The Psi adaptive procedure (Kontsevich & Tyler, 1999) was used to estimate the "critical curvature": the minimum axis curvature for which the test object is judged to fall over. This was estimated in three conditions: baseline (no adaptation), post-adaptation with high-stability shapes (mean critical angle: 63.5 degrees), and post-adaptation with low-stability shapes (mean critical angle: 16.7 degrees). In the adaptation conditions, observers viewed a sequence of 40 distinct adapting shapes. Following this, a test shape was shown, and observers indicated whether the object would stay upright or fall over. Adaptation was "topped up" after every test shape. Most observers exhibited adaptation to object stability: the estimated critical curvature was lower following adaptation to high-stability shapes, and higher following adaptation to low-stability shapes. The results suggest that object stability may indeed be a natural perceptual variable.
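The critical curvature in this study was estimated with the Psi adaptive procedure (Kontsevich & Tyler, 1999). The following is a minimal Python sketch of the general Psi idea, not the study's implementation: a posterior over a grid of psychometric-function parameters is maintained, and each trial presents the stimulus that minimises the expected posterior entropy. The logistic form, lapse rate, parameter grids, curvature units and the simulated observer (`logistic_pf`, `PsiStaircase`, `simulate`) are all hypothetical choices made for illustration.

```python
import math
import random


def logistic_pf(x, alpha, beta, lapse=0.02):
    # Hypothetical psychometric function: probability of a "falls over" response
    # at axis curvature x, with threshold alpha, spread beta and a small lapse rate.
    core = 1.0 / (1.0 + math.exp(-(x - alpha) / beta))
    return lapse / 2.0 + (1.0 - lapse) * core


def entropy(p):
    # Shannon entropy (nats) of a discrete distribution.
    return -sum(q * math.log(q) for q in p if q > 0.0)


class PsiStaircase:
    """Minimal Psi method over a discrete (alpha, beta) parameter grid."""

    def __init__(self, stim_grid, alpha_grid, beta_grid):
        self.stims = list(stim_grid)
        self.params = [(a, b) for a in alpha_grid for b in beta_grid]
        self.prior = [1.0 / len(self.params)] * len(self.params)
        # Precompute p("fall") for every (stimulus, parameter) pair.
        self.p_fall = [[logistic_pf(x, a, b) for (a, b) in self.params]
                       for x in self.stims]

    def _posteriors(self, i):
        # Posterior over parameters after each possible response to stimulus i.
        lik = self.p_fall[i]
        joint1 = [pr * l for pr, l in zip(self.prior, lik)]
        joint0 = [pr * (1.0 - l) for pr, l in zip(self.prior, lik)]
        p1, p0 = sum(joint1), sum(joint0)
        return p1, [j / p1 for j in joint1], p0, [j / p0 for j in joint0]

    def next_index(self):
        # Choose the stimulus that minimises the expected posterior entropy.
        best_i, best_h = 0, float("inf")
        for i in range(len(self.stims)):
            p1, post1, p0, post0 = self._posteriors(i)
            h = p1 * entropy(post1) + p0 * entropy(post0)
            if h < best_h:
                best_i, best_h = i, h
        return best_i

    def update(self, i, fell):
        # Replace the prior with the posterior for the observed response.
        _, post1, _, post0 = self._posteriors(i)
        self.prior = post1 if fell else post0

    def threshold_estimate(self):
        # Posterior mean of alpha, i.e. the estimated "critical curvature".
        return sum(pr * a for pr, (a, _) in zip(self.prior, self.params))


def simulate(true_alpha=0.5, true_beta=0.1, n_trials=150, seed=1):
    # Hypothetical simulated observer; curvature values are in arbitrary units.
    rng = random.Random(seed)
    stims = [i / 24 for i in range(25)]
    alphas = [i / 29 for i in range(30)]
    betas = [0.05, 0.1, 0.2, 0.4]
    psi = PsiStaircase(stims, alphas, betas)
    for _ in range(n_trials):
        i = psi.next_index()
        fell = rng.random() < logistic_pf(stims[i], true_alpha, true_beta)
        psi.update(i, fell)
    return psi.threshold_estimate()


print(round(simulate(), 3))
```

With a simulated observer whose threshold is 0.5, the posterior-mean estimate should typically land near 0.5 after 150 trials. In a real session, the `fell` response would come from the observer's "stay upright" / "fall over" judgment rather than from `rng.random()`, and adaptation top-ups would be interleaved between trials.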

Cholewiak, S. A., Fleming, R. W., & Singh, M. (2011). On the edge: Perceived stability and center of mass of 3D objects. Perception, 40, ECVP Abstract Supplement, 101. doi: 10.1068/v110347

Abstract: Visual estimation of object stability is an ecologically important judgment that allows observers to predict objects' physical behavior. Previously, we used the 'critical angle' of tilt to measure perceived object stability (VSS 2010; VSS 2011). The current study uses a different paradigm, measuring the critical extent to which an object can "stick out" over a precipitous edge before falling off. Observers stereoscopically viewed a rendered scene containing a 3D object placed near a table’s edge. Objects were slanted conical frustums that varied in slant angle, aspect ratio, and direction of slant (pointing toward or away from the edge). In the stability task, observers adjusted the horizontal position of the object relative to the precipitous edge until it was perceived to be in unstable equilibrium. In the center-of-mass (CoM) task, they adjusted the height of a small ball probe to indicate the perceived CoM. All three variables had a significant effect on stability judgments. Observers underestimated stability for frustums slanting away from the edge and overestimated stability for frustums slanting toward it, suggesting a bias toward the center of the supporting base. However, performance was close to veridical in the CoM task, providing further evidence of mutual inconsistency between perceived stability and CoM judgments.

Kibbe, M. M., Kim, S.-H., Cholewiak, S. A., & Denisova, K. (2011). Curvature aftereffect and visual-haptic interactions in simulated environments. Journal of Vision, 11(11), 784. doi: 10.1167/11.11.784

Abstract: Repeated haptic exploration of a curved surface results in an adaptation effect, such that flat surfaces feel curved in the direction opposite to that of the explored surface. Previous studies used real objects and involved contact of skin on surface with no visual feedback. To what extent do cutaneous, proprioceptive, and visual cues play a role in the neural representation of surface curvature? The current study used a Personal Haptic Interface Mechanism (PHANToM) force-feedback device to simulate physical objects that subjects could explore with a stylus. If a haptic aftereffect is observed during exploration of virtual surfaces, it would suggest neural representations of curvature based solely on proprioceptive input. If visual input plays a role in the absence of haptic convexity/concavity, it would provide evidence for a visual input to the neural haptic representation. Method. Baseline curvature discrimination was obtained from subjects who explored a virtual surface with the stylus and reported whether it was concave or convex. In Experiment 1, subjects adapted to a concave or convex curvature (±3.2 m⁻¹) and reported the curvature of a test surface (ranging from −1.6 m⁻¹ to 1.6 m⁻¹). In Experiment 2, subjects adapted with their left hands and were tested with their right hands (intermanually). In Experiment 3, subjects were given visual feedback on a computer screen indicating that the trajectory of the stylus tip followed a curved surface, while the haptic surface was flat. Results. In Experiment 1, subjects showed a strong curvature aftereffect, indicating that proprioceptive input alone is sufficient. Subjects in Experiment 2 showed weaker but significant adaptation, indicating a robust neural representation across hands. No aftereffect was found with purely visual curvature input in Experiment 3, suggesting that the neural representation is not affected by synchronized visual feedback, at least when the two modalities disagree. Implications for visual-haptic representations will be discussed.

Pantelis, P., Cholewiak, S. A., Ringstad, P., Sanik, K., Weinstein, A., Wu, C.-C., & Feldman, J. (2011). Perceiving intelligent action: Experiments in the interpretation of intentional motion. In IGERT 2011 poster competition.

Abstract: A remarkable characteristic of human perceptual systems is the ability to recognize the goals and intentions of other living things – "intelligent agents" – on the basis of their actions or patterns of motion. We use this ability to anticipate the behavior of agents in the environment and to better inform our decision making. The aim of this project is to develop a theoretical model of the perception of intentions, shedding light on both the function of the human (biological) perceptual system and the design of computational models that drive artificial systems (robots). To this end, an interdisciplinary group of IGERT students created a novel virtual environment populated by intelligent autonomous agents and endowed these agents with human-like capacities: goals, real-time perception, memory, planning, and decision making. One phase of the project focused on the perceptual judgments of human observers watching the agents. Experimental subjects’ judgments of the agents’ intentions were accurate and in agreement with one another. In another experiment, the agents were programmed to evolve through many "generations" as they competed for "food" and survival within a game-like framework. We examined whether the ability of human observers to classify and interpret the intentions of agents improved as the behavior of successive agent generations became more optimal. Our results show that (a) dynamic virtual environments can be developed that embody the essential perceptual cues to the intentions of intelligent agents, and (b) when studied in such environments, human perception of the intentions of intelligent agents proves to be accurate, rational and rule-governed.

Pantelis, P., Cholewiak, S. A., Ringstad, P., Sanik, K., Weinstein, A., Wu, C.-C., & Feldman, J. (2011). Perception of intentions and mental states in autonomous virtual agents. Journal of Vision, 11(11), 733. doi: 10.1167/11.11.733

Abstract: Comprehension of goal-directed, intentional motion is an important but understudied visual function. To study it, we created a two-dimensional virtual environment populated by independently programmed autonomous virtual agents. These agents (depicted as oriented triangles) navigate the environment, playing a game with a simple goal (collecting "food" and bringing it back to a cache location). The agents' behavior is controlled by a small number of distinct states or subgoals (including exploring, gathering food, attacking, and fleeing), which can be thought of as "mental" states. Our subjects watched short vignettes of a small number of agents interacting. We studied their ability to detect and classify agents' mental states on the basis of their motions and interactions. In one version of our experiment, the four mental states were explicitly explained, and subjects were asked to continually classify one target agent with respect to these states via keypresses. At each point in time, we were able to compare subjects' responses to the "ground truth" (the actual state of the target agent at that time). Although the internal state of the target agent is inherently hidden and can only be inferred, subjects reliably classified it: "ground truth" accuracy was 52%, more than twice chance performance. Interestingly, the percentage of time during which subjects' responses agreed with one another (63%) was higher than their accuracy with respect to "ground truth." In a second experiment, we allowed subjects to invent their own behavioral categories based on sample vignettes, without being told the nature (or even the number) of distinct states in the agents' actual programming. Even under this condition, the number of perceived state transitions was strongly correlated with the number of actual transitions made by a target agent. Our methods facilitate a rigorous and more comprehensive study of the "psychophysics of intention." For details and demos, see http://ruccs.rutgers.edu/~jacob/demos/imps/

Cholewiak, S. A., Pantelis, P., Ringstad, P., Sanik, K., Wu, C.-C., & Feldman, J. (2010b, May). Living within a virtual environment populated by intelligent autonomous agents. In NSF IGERT 2010 Project Meeting. Washington, DC.

Cholewiak, S. A., Singh, M., Fleming, R., & Pastakia, B. (2010). The perception of physical stability of 3D objects: The role of parts. Journal of Vision, 10(7), 77. doi: 10.1167/10.7.77

Abstract: Research on 3D shape has focused largely on the perception of local geometric properties, such as surface depth, orientation, or curvature. Relatively little is known about how the visual system organizes local measurements into global shape representations. Here, we investigated how the perceptual organization of shape affects the perception of physical stability of 3D objects. Estimating stability is important for predicting object behavior and guiding motor actions, and requires the observer to integrate information from the entire object. Observers stereoscopically viewed a rendered scene containing a 3D shape placed near the edge of a table. They adjusted the tilt of the object over the edge to set its perceived critical angle, i.e., the angle at which the object is equally likely to fall off the table or return to its upright position. The shapes were conical frustums with one of three aspect ratios, presented either alone or with a part protruding from the side. When present, the boundaries between the part and the frustum were either sharp or smooth. Importantly, the part faced either directly toward the edge of the table or directly away from it. Observers were close to the physical prediction for tall/narrow shapes, but with decreasing aspect ratio (shorter/wider shapes), there was a tendency to underestimate the critical angle. With this bias factored out, we found that errors were mostly positive when the part faced toward the table's edge, and mostly negative when it faced the opposite direction. These results are consistent with observers underestimating the physical contribution of the attached part. Thus, in making judgments of physical stability, observers tend to down-weight the influence of the attached part, consistent with a robust-statistics approach to determining the influence of a part on global visual estimates (Cohen & Singh, 2006; Cohen et al., 2008).
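For orientation, the kind of physical prediction that settings in this task are compared against can be sketched as follows. This is a minimal illustration, not the authors' physics or rendering code; it assumes a uniform-density solid frustum, approximates the attached part as a point mass, and tilts the object about the rim point of its circular base nearest the table edge. All dimensions and the part mass are hypothetical.

```python
import math


def frustum_volume(R, r, H):
    # Solid conical frustum: bottom radius R, top radius r, height H.
    return math.pi * H / 3.0 * (R * R + R * r + r * r)


def frustum_com_height(R, r, H):
    # Height of the centroid above the base (H/4 for a cone, H/2 for a cylinder).
    return H * (R * R + 2 * R * r + 3 * r * r) / (4.0 * (R * R + R * r + r * r))


def composite_com(parts):
    # parts: list of (mass, x, z); x is the horizontal offset from the base centre
    # (positive toward the table edge), z is the height above the base.
    total = sum(m for m, _, _ in parts)
    x = sum(m * px for m, px, _ in parts) / total
    z = sum(m * pz for m, _, pz in parts) / total
    return x, z


def critical_angle_deg(base_radius, com_x, com_z):
    # Tilting toward the edge pivots the object on the rim point at x = +base_radius.
    # It balances (unstable equilibrium) when the COM lies directly above that pivot,
    # i.e. after a tilt of atan(horizontal distance to pivot / COM height).
    # Assumes com_x < base_radius, i.e. the object is stable when upright.
    return math.degrees(math.atan2(base_radius - com_x, com_z))


R, r, H = 1.0, 0.6, 3.0                      # hypothetical frustum dimensions
body_mass = frustum_volume(R, r, H)          # uniform density of 1
body = (body_mass, 0.0, frustum_com_height(R, r, H))
part_mass = 0.15 * body_mass                 # hypothetical attached part

for label, side in (("no part", None), ("part toward edge", 1.0), ("part away from edge", -1.0)):
    parts = [body] if side is None else [body, (part_mass, side * 1.1, 2.0)]
    x, z = composite_com(parts)
    print(label, round(critical_angle_deg(R, x, z), 1))
```

In this toy model, a part facing the edge shifts the COM toward the pivot and lowers the physical critical angle, while a part facing away raises it. An observer who down-weights the part would therefore overshoot in the first case and undershoot in the second, which is the sign pattern the abstract reports.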

Cholewiak, S. A., & Singh, M. (2009). Perceptual estimation of variance in orientation and its dependence on sample size. Journal of Vision, 9(8), 1019. doi: 10.1167/9.8.1019

Abstract: Previous research on statistical perception has shown that subjects are very good at perceptually estimating first-order statistical properties of sets of similar objects (such as the mean size of a set of disks). However, it is unlikely that our mental representation of the world includes only a list of mean estimates of various attributes. Work on motor and perceptual decisions, for example, suggests that observers are implicitly aware of their own motor/perceptual uncertainty and are able to combine it with an experimenter-specified loss function in a near-optimal manner. The current study investigated the representation of variance by measuring difference thresholds for the orientation variance of sets of narrow isosceles triangles with relatively large standard deviations (SD) of 10, 20, and 30 degrees, and with sample sizes (N) of 10, 20, and 30. Experimental displays consisted of multiple triangles whose orientations were drawn from a von Mises distribution. Observers were tested in a 2IFC task in which one display had a base SD and the other (test) display had an SD differing from it by ±10%, ±30%, ±50%, or ±70% of the base SD. Observers indicated which interval had higher orientation variance. Psychometric curves were fitted to observer responses, and difference thresholds were computed for the nine conditions (3 base SDs × 3 sample sizes). The results showed that observers can estimate variance in orientation with essentially no bias. Although observers are thus clearly sensitive to variance, their sensitivity is not as high as for the mean. The relative thresholds (difference threshold SD / base SD) exhibited little dependence on base SD, but increased greatly (from about 20% to 40%) as sample size decreased from 30 to 10. Comparing the σ of the cumulative normal fits with the standard error of the SD, we found that the estimated σ values were on average about 3 times larger than the corresponding standard errors.
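The comparison with the standard error of the SD can be made concrete with a small simulation. This is a hedged sketch, not the authors' analysis. It estimates how much the sample SD of N von Mises-distributed orientations varies from display to display, relative to its mean. The conversion from SD to the concentration κ (κ ≈ 1/σ² in radians) and the normal-theory formula σ/√(2(N − 1)) are approximations assumed here, and the function names are hypothetical.

```python
import math
import random
import statistics


def kappa_from_sd_deg(sd_deg):
    # Assumption: large-kappa approximation, von Mises(kappa) ~ normal with
    # variance 1/kappa (radians^2). Reasonable at 10-20 deg, rougher at 30 deg.
    return 1.0 / math.radians(sd_deg) ** 2


def sample_orientations_deg(n, sd_deg, rng):
    # Draw n orientations (degrees) around a mean of 0 from a von Mises distribution.
    kappa = kappa_from_sd_deg(sd_deg)
    samples = []
    for _ in range(n):
        a = rng.vonmisesvariate(0.0, kappa)          # returned in [0, 2*pi)
        a = (a + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
        samples.append(math.degrees(a))
    return samples


def analytic_relative_se(n):
    # For normal samples, the SE of the sample SD is roughly sigma / sqrt(2 (n - 1)).
    return 1.0 / math.sqrt(2 * (n - 1))


def simulated_relative_se(n, sd_deg, reps=2000, seed=0):
    # Display-to-display spread of the sample SD, relative to its mean.
    rng = random.Random(seed)
    sds = [statistics.stdev(sample_orientations_deg(n, sd_deg, rng)) for _ in range(reps)]
    return statistics.stdev(sds) / statistics.mean(sds)


for n in (10, 20, 30):
    print(n, round(analytic_relative_se(n), 3), round(simulated_relative_se(n, 20), 3))
```

Under the normal approximation, the relative standard error of the sample SD falls from about 0.24 at N = 10 to about 0.13 at N = 30. This shows why relative thresholds for variance would be expected to grow as the sample size shrinks, even for an observer limited only by sampling noise.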

Cholewiak, S. A., & Tan, H. Z. (2007, November). Haptic stiffness identification and information transfer. In Abstracts of the Psychonomic Society, 48th Annual Meeting (p. 83). Long Beach, CA.

Abstract: This study investigated static information transfer (IT) in stiffness identification. Past research on stiffness perception has measured only Weber fractions. In many applications where haptic virtual environments are used for data perceptualization, both the ability to discriminate stiffness (the Weber fraction) and the number of correctly identifiable stiffness levels (2^IT) are important for selecting rendering parameters. Ten participants were asked to tap a virtual surface vertically using a custom-designed haptic force-feedback device and identify the stiffness level. Five stiffness values in the range 0.2 to 3.0 N/mm were used. The virtual surface was modeled as a linear elastic spring and, whenever it was penetrated, exerted an upward resistive force equal to the product of stiffness and penetration depth. A total of 250 trials were collected per participant. The average static IT was 1.57 bits, indicating that participants were able to correctly identify about three stiffness levels (2^1.57 ≈ 3).

Other conference presentations

Cholewiak, S. A., Kunsberg, B., Zucker, S. W., & Fleming, R. W. (2014, October). Texture and shading flows predict performance in first and second order 3D shape tasks. In PRISM4: Perceptual Representation of Illumination, Shape and Materials. Ankara, Turkey.

Pantelis, P., Cholewiak, S. A., Gerstner, T., Kharkwal, G., Sanik, K., Weinstein, A., Wu, C.-C., & Feldman, J. (2013, May). Evolving virtual autonomous agents for experiments in intentional reasoning. In 7th Annual Rutgers Perceptual Science Forum. New Brunswick, NJ.

Cholewiak, S. A., Singh, M., & Fleming, R. (2012, May). Visual adaptation to physical stability of objects. In 6th Annual Rutgers Perceptual Science Forum. New Brunswick, NJ.

Kibbe, M. M., Kim, S.-H., Cholewiak, S. A., & Denisova, K. (2011, May). Curvature aftereffect and visual-haptic interactions in simulated environments. In 5th Annual Rutgers Perceptual Science Forum.

Cholewiak, S. A., Pantelis, P., Ringstad, P., Sanik, K., Wu, C.-C., & Feldman, J. (2010, May). Inferring the intention and mental state of evolved autonomous agents. In 4th Annual Rutgers Perceptual Science Forum. New Brunswick, NJ.

Cholewiak, S. A., Kim, S.-H., Ringstad, P., Wilder, J., & Singh, M. (2009, September). Weebles may wobble, but conical frustums fall down: Investigating perceived 3-D object stability. In 2nd Annual Rutgers Fall Cognitive Festival. New Brunswick, NJ.

Cholewiak, S. A., & Singh, M. (2008, September). Representation of variance in perceptual attributes. In 1st Annual Rutgers Fall Cognitive Festival. New Brunswick, NJ.

Cholewiak, S. A., & Tan, H. Z. (2008, May). Haptic identification and information transfer of stiffness and force magnitude. In 2nd Annual Rutgers Perceptual Science Forum. New Brunswick, NJ.

Contributions acknowledged in:

Spröte, P., & Fleming, R. W. (2013). Concavities, negative parts, and the perception that shapes are complete. Journal of Vision, 13(14). doi: 10.1167/13.14.3

Abstract: When we perceive the shape of an object, we can often make many other inferences about the object, derived from its shape. For example, when we look at a bitten apple, we perceive not only the local curvatures across the surface, but also that the shape of the bitten region was caused by forcefully removing a piece from the original shape (excision), leading to a salient concavity or negative part in the object. However, excision is not the only possible cause of concavities or negative parts in objects; for example, we do not perceive the spaces between the fingers of a hand to have been excised. Thus, in order to infer excision, it is not sufficient to identify concavities in a shape; some additional geometrical conditions must also be satisfied. Here, we studied the geometrical conditions under which subjects perceived objects as having been bitten, as opposed to complete shapes. We created 2-D shapes by intersecting pairs of irregular hexagons and discarding the regions of overlap. Subjects rated the extent to which the resulting shapes appeared to be bitten or whole on a 10-point scale. We find that subjects were significantly above chance at identifying whether shapes were bitten or whole. Despite large intersubject differences in overall performance, subjects were surprisingly consistent in their judgments of shapes that had been bitten. We measured the extent to which various geometrical features predict subjects' judgments and find that the impression that an object is bitten is strongly correlated with the relative depth of the negative part. Finally, we discuss the relationship between excision and other perceptual organization processes, such as modal and amodal completion, and the inference of other attributes of objects, such as their material properties.

Witt, J. K., & Proffitt, D. R. (2007). Perceived slant: A dissociation between perception and action. Perception, 36, 249-257. doi: 10.1068/p5449

Bakdash, J. Z., Augustyn, J. S., & Proffitt, D. R. (2006). Large displays enhance spatial knowledge of a virtual environment. In ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization, 59-62. doi: 10.1145/1140491.1140503

Abstract: Previous research has found performance for several egocentric tasks to be superior on physically large displays relative to smaller ones, even when visual angle is held constant. This finding is believed to be due to the more immersive nature of large displays. In our experiment, we examined whether using a large display to learn a virtual environment (VE) would improve egocentric knowledge of the target locations. Participants learned the location of five targets by freely exploring a desktop large-scale VE of a city on either a small (25" diagonally) or large (72" diagonally) screen. Viewing distance was adjusted so that both displays subtended the same visual angle. Knowledge of the environment was then assessed using a head-mounted display in virtual reality, by asking participants to stand at each target and point at the other unseen targets. Angular pointing error was significantly lower when the environment was learned on a 72" display. Our results suggest that large displays are superior for learning a virtual environment and that the advantages of learning an environment on a large display may transfer to navigation in the real world.

Cholewiak, R. W., & McGrath, C. M. (2006). Vibrotactile targeting in multimodal systems: Accuracy and integration. [Poster Presentation at IEEE Haptics 2006 Symposium]. Arlington, VA: IEEE Computer Society. doi: 10.1109/HAPTICS.2006.182

Abstract: Tactile displays to enhance virtual and real environments are becoming increasingly common. Extending previous studies, we explored spatial localization of individual sites on a dense tactile array worn on the observer's trunk, and their interaction with simultaneously presented visual stimuli in an isomorphic display. Stimuli were composed of individual vibratory sites on the tactile array or projected flashes of light. In all cases, the task for the observer was to identify the location of the target stimulus, whose modality was defined for that session. In the multimodal experiment, observers were also required to identify the quality of a stimulus presented in the other modality (the "distractor"). Overall, performance was affected by the location of the target within the array. Having to identify the quality of a simultaneous stimulus in the other modality reduced both target accuracy and response time, but these effects did not seem to be a function of the relative location of the distractor.

Schnall, S., & Clore, G. L. (2004). Emergent meaning in affective space: Conceptual and spatial congruence produces positive evaluations. In Proceedings of the Twenty-Sixth Annual Conference of the Cognitive Science Society, 1209-1214.

Abstract: Based on the theory of conceptual metaphor, we investigated the evaluative consequences of a match (or mismatch) of different conceptual relations (good vs. bad; abstract vs. concrete) with their corresponding spatial relation (UP vs. DOWN). Good and bad words that were either abstract or concrete were presented in an up or down spatial location. Words for which the conceptual dimensions matched the spatial dimension were evaluated most favorably. When neither of the two conceptual dimensions matched the spatial dimension, ratings were not as favorable as when the dimensions did match, but were still significantly more favorable than when one conceptual category was matched with the spatial category (e.g., UP and abstract) while the other one was not (e.g., UP and bad). Results suggest that a metacognitive feeling of fluency can produce an additional layer of evaluative information that is independent of actual stimulus valence.

Cholewiak, R. W. & Collins, A. A. (2003). Vibrotactile localization on the arm: effects of place, space, and age. Perception & Psychophysics, 65, 1058-1077. doi: 10.3758/BF03194834

Abstract: Although tactile acuity has been explored for touch stimuli, vibrotactile resolution on the skin has not. In the present experiments, we explored the ability to localize vibrotactile stimuli on a linear array of tactors on the forearm. We examined the influence of a number of stimulus parameters, including the frequency of the vibratory stimulus, the locations of the stimulus sites on the body relative to specific body references or landmarks, the proximity among driven loci, and the age of the observer. Stimulus frequency and age group showed much less of an effect on localization than was expected. The position of stimulus sites relative to body landmarks and the separation among sites exerted the strongest influence on localization accuracy, and these effects could be mimicked by introducing an "artificial" referent into the tactile array.
